Authors:

(1) Shengqiong Wu, NExT++, School of Computing, National University of Singapore;

(2) Hao Fei, NExT++, School of Computing, National University of Singapore (corresponding author: [email protected]);

(3) Leigang Qu, NExT++, School of Computing, National University of Singapore;

(4) Wei Ji, NExT++, School of Computing, National University of Singapore;

(5) Tat-Seng Chua, NExT++, School of Computing, National University of Singapore.

Table of Links

- Abstract and 1 Introduction

- 2 Related Work

- 3 Overall Architecture

- 4 Lightweight Multimodal Alignment Learning

- 5 Modality-switching Instruction Tuning

- 5.1 Instruction Tuning

- 5.2 Instruction Dataset

- 6 Experiments

- 6.1 Any-to-any Multimodal Generation and 6.2 Example Demonstrations

- 7 Conclusion and References

2 Related Work

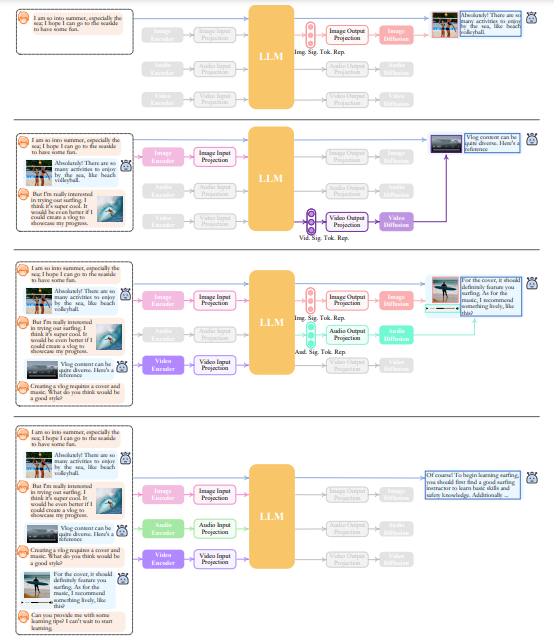

Cross-modal Understanding and Generation. Our world is replete with multimodal information, and we continuously engage in the intricate task of comprehending and producing cross-modal content. The AI community has correspondingly developed varied forms of cross-modal learning tasks, such as Image/Video Captioning [99, 16, 56, 27, 49], Image/Video Question Answering [94, 90, 48, 98, 3], Text-to-Image/Video/Speech Synthesis [74, 30, 84, 23, 17, 51, 33], Image-to-Video Synthesis [18, 37] and more, all of which have advanced rapidly in the past decades. Researchers have proposed highly effective multimodal encoders, with the aim of constructing unified representations encompassing various modalities. Meanwhile, because different modalities occupy distinct feature spaces, modality alignment learning is essential. Moreover, to generate high-quality content, many strong-performing methods have been proposed, such as Transformers [82, 101, 17, 24], GANs [53, 7, 93, 110], VAEs [81, 67], Flow models [73, 6] and the current state-of-the-art diffusion models [31, 64, 57, 22, 68]. In particular, diffusion-based methods such as DALL-E [66] and Stable Diffusion [68] have recently delivered remarkable performance in a wide range of cross-modal generation tasks. While all previous cross-modal learning efforts are limited to the comprehension of multimodal inputs only, CoDi [78] recently presented a groundbreaking development. Leveraging the power of diffusion models, CoDi can generate any combination of output modalities, including language, images, videos, or audio, from any combination of input modalities in parallel. Regrettably, with only parallel cross-modal feeding and generation, CoDi might still fall short of human-like deep reasoning over the input content.

Multimodal Large Language Models. LLMs have already had a profound and revolutionary impact on the entire AI community and beyond. The most notable LLMs, i.e., OpenAI’s ChatGPT [59] and GPT-4 [60], with alignment techniques such as instruction tuning [61, 47, 104, 52] and reinforcement learning from human feedback (RLHF) [75], have demonstrated remarkable language understanding and reasoning abilities. A series of open-source LLMs, e.g., Flan-T5 [13], Vicuna [12], LLaMA [80] and Alpaca [79], have greatly spurred advancement and contributed to the community [109, 100]. Subsequently, significant efforts have been made to construct LLMs that deal with multimodal inputs and tasks, leading to the development of multimodal LLMs (MM-LLMs).

On the one hand, most researchers build fundamental MM-LLMs by aligning the well-trained encoders of various modalities to the textual feature space of LLMs, so that the LLMs can perceive inputs from other modalities [35, 109, 76, 40]. For example, Flamingo [1] uses a cross-attention layer to connect a frozen image encoder to the LLM. BLIP-2 [43] employs a Q-Former to translate the input image queries to the LLM. LLaVA [52] employs a simple projection scheme to map image features into the word embedding space. There are also various similar practices for building MM-LLMs that are able to understand videos (e.g., Video-Chat [44] and Video-LLaMA [103]), audio (e.g., SpeechGPT [102]), etc. Notably, PandaGPT [77] achieves a comprehensive understanding of six different modalities simultaneously by integrating a multimodal encoder, i.e., ImageBind [25].
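To make the projection idea concrete, the following is a minimal sketch under stated assumptions, not the implementation of LLaVA or of any other cited system. It assumes a single linear layer that maps each image patch feature into the word embedding dimension, after which the projected vectors are prepended to the text embeddings. All names, dimensions, and the random weights (`make_projection`, `project`, `D_IMAGE`, `D_TEXT`) are hypothetical stand-ins for learned components.

```python
import random


def make_projection(d_image, d_text, seed=0):
    """Return a (d_text x d_image) weight matrix and a zero bias.

    Random values stand in for parameters that would normally be learned.
    """
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, 0.02) for _ in range(d_image)] for _ in range(d_text)]
    bias = [0.0] * d_text
    return weight, bias


def project(features, weight, bias):
    """Linearly map each feature vector (length d_image) to length d_text."""
    return [
        [sum(w * x for w, x in zip(row, feat)) + b for row, b in zip(weight, bias)]
        for feat in features
    ]


# Hypothetical sizes, chosen only to keep the example small.
D_IMAGE, D_TEXT, N_PATCHES, N_WORDS = 8, 4, 3, 5

rng = random.Random(1)
# Stand-in for the output of a frozen image encoder: one vector per patch.
image_features = [[rng.random() for _ in range(D_IMAGE)] for _ in range(N_PATCHES)]
# Stand-in for the LLM's word embeddings of the text prompt.
text_embeddings = [[rng.random() for _ in range(D_TEXT)] for _ in range(N_WORDS)]

weight, bias = make_projection(D_IMAGE, D_TEXT)
image_tokens = project(image_features, weight, bias)

# Projected image vectors now share the text embedding dimension,
# so they can be concatenated with the word embeddings as LLM input.
llm_input = image_tokens + text_embeddings
```

In this sketch only the projection would need training, while the encoder and the LLM could stay frozen; that is one reason such alignment layers are considered lightweight.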

Nevertheless, these MM-LLMs are all subject to the limitation of only perceiving multimodal data, without generating content in arbitrary modalities. To achieve LLMs with both multimodal input and output, some works explore employing LLMs as decision-makers and utilizing existing off-the-shelf multimodal encoders and decoders as tools to execute multimodal input and output, such as Visual-ChatGPT [88], HuggingGPT [72], and AudioGPT [34]. As mentioned earlier, passing messages between modules as pure text (i.e., LLM textual instructions) under the discrete pipeline scheme will inevitably introduce noise. Moreover, the lack of comprehensive tuning across the whole system significantly limits the efficacy of semantic understanding. Our work combines the benefits of both of the above types, i.e., learning an any-to-any MM-LLM in an end-to-end manner.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.