Authors:

(1) Liang Wang, Microsoft Corporation, and Correspondence to ([email protected]);

(2) Nan Yang, Microsoft Corporation, and correspondence to ([email protected]);

(3) Xiaolong Huang, Microsoft Corporation;

(4) Linjun Yang, Microsoft Corporation;

(5) Rangan Majumder, Microsoft Corporation;

(6) Furu Wei, Microsoft Corporation and Correspondence to ([email protected]).

Table of Links

3 Method

4 Experiments

4.1 Statistics of the Synthetic Data

4.2 Model Fine-tuning and Evaluation

5 Analysis

5.1 Is Contrastive Pre-training Necessary?

5.2 Extending to Long Text Embeddings and 5.3 Analysis of Training Hyperparameters

B Test Set Contamination Analysis

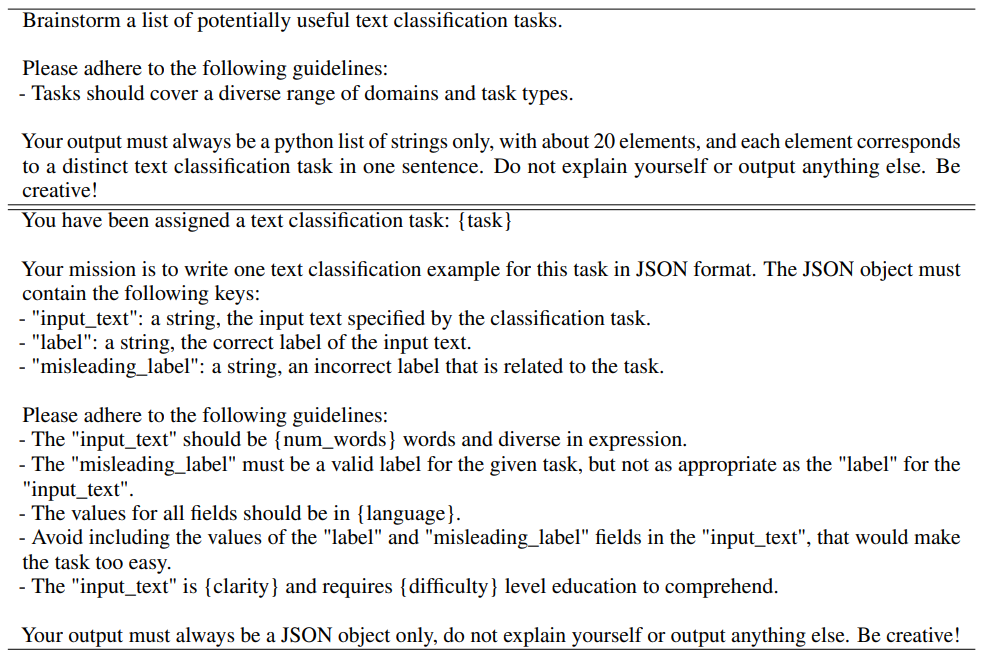

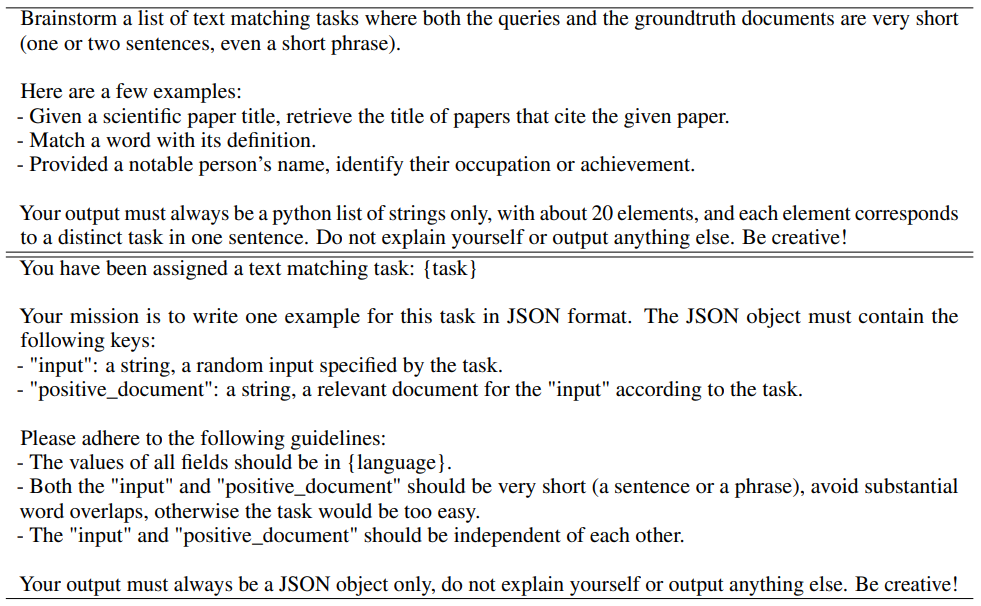

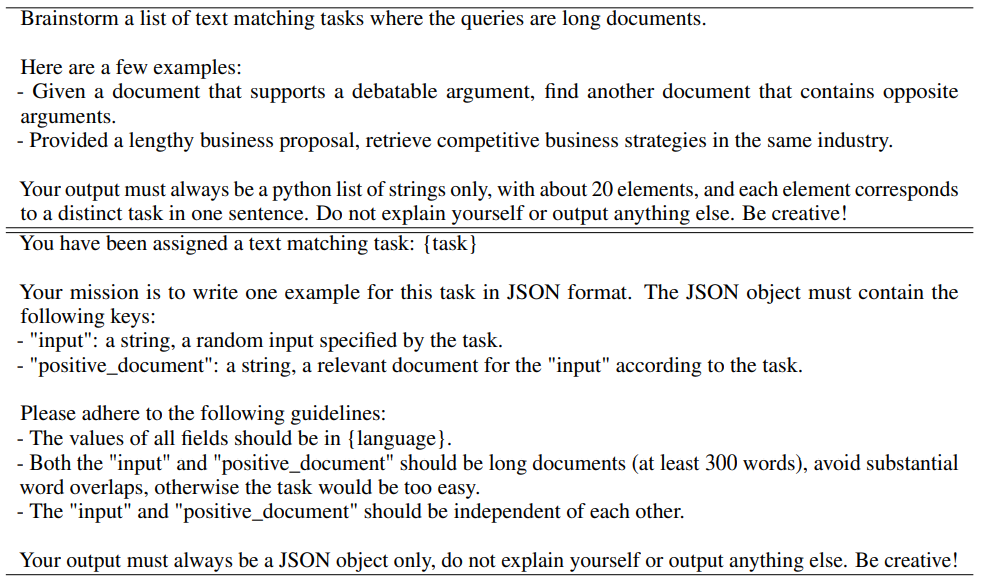

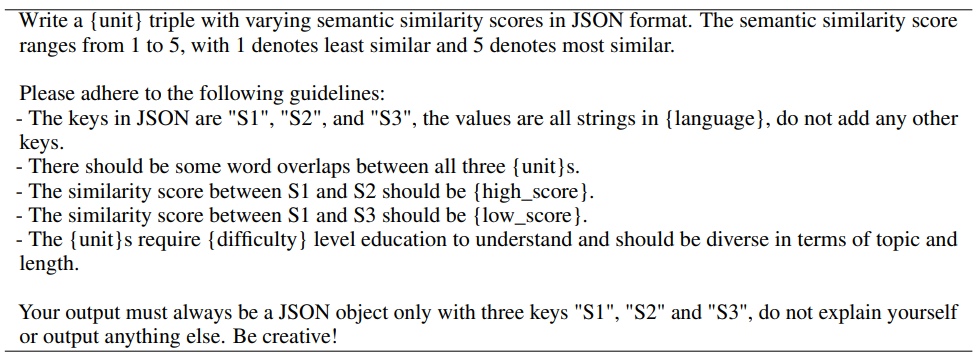

C Prompts for Synthetic Data Generation

D Instructions for Training and Evaluation

D Instructions for Training and Evaluation

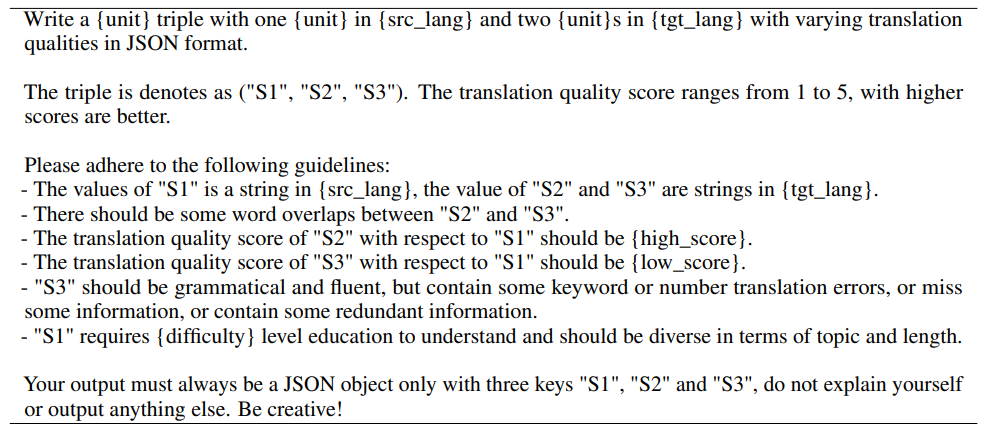

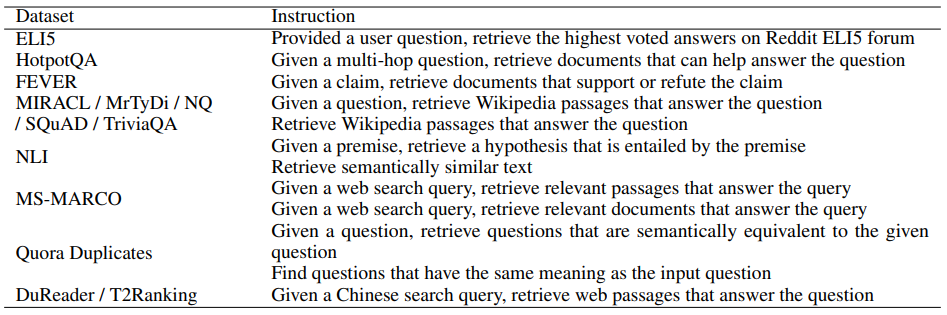

We manually write instructions for training datasets, as listed in Table 13. For evaluation datasets, the instructions are listed in Table 14.

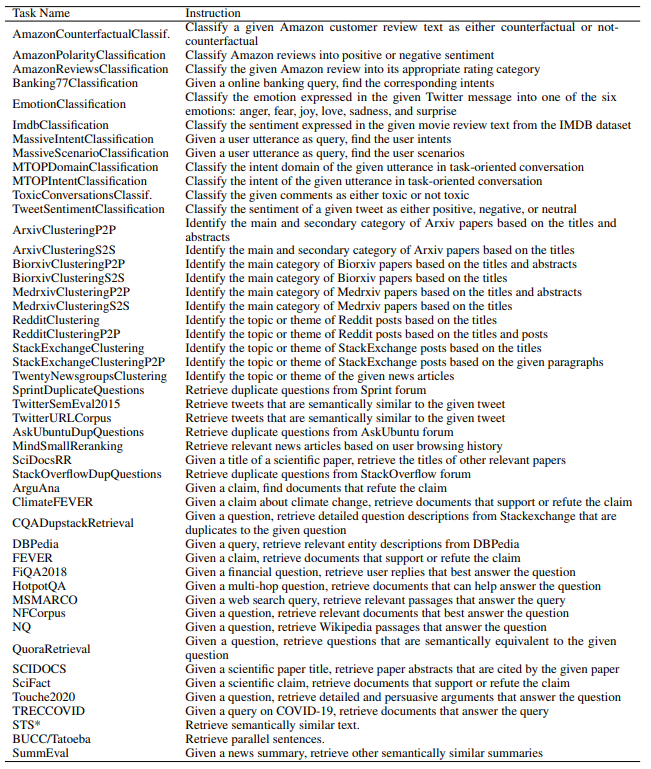

![Table 15: Results for each dataset in the MTEB benchmark. The evaluation metrics and detailed baseline results are available in the original paper [28].](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-eqf30n2.png)

This paper is available on arxiv under CC0 1.0 DEED license.