Authors:

(1) Liang Wang, Microsoft Corporation, and correspondence to ([email protected]);

(2) Nan Yang, Microsoft Corporation, and correspondence to ([email protected]);

(3) Xiaolong Huang, Microsoft Corporation;

(4) Linjun Yang, Microsoft Corporation;

(5) Rangan Majumder, Microsoft Corporation;

(6) Furu Wei, Microsoft Corporation, and correspondence to ([email protected]).

Table of Links

3 Method

4 Experiments

4.1 Statistics of the Synthetic Data

4.2 Model Fine-tuning and Evaluation

5 Analysis

5.1 Is Contrastive Pre-training Necessary?

5.2 Extending to Long Text Embeddings and 5.3 Analysis of Training Hyperparameters

B Test Set Contamination Analysis

C Prompts for Synthetic Data Generation

D Instructions for Training and Evaluation

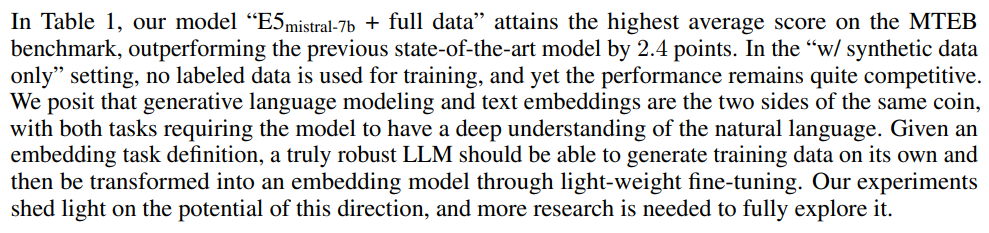

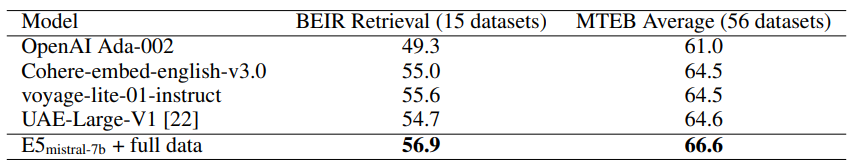

4.3 Main Results

![Table 1: Results on the MTEB benchmark [28] (56 datasets in the English subset). The numbers are averaged for each category. Please refer to Table 15 for the scores per dataset.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-ms83003.png)

In Table 2, we also compare our model with several commercial text embedding models. However, because these models lack transparency and documentation, a fair comparison is not feasible. We focus on retrieval performance on the BEIR benchmark, since retrieval-augmented generation (RAG) is an emerging technique for enhancing LLMs with external knowledge and proprietary data. As Table 2 shows, our model outperforms the current commercial models by a significant margin.

This paper is available on arXiv under the CC0 1.0 DEED license.